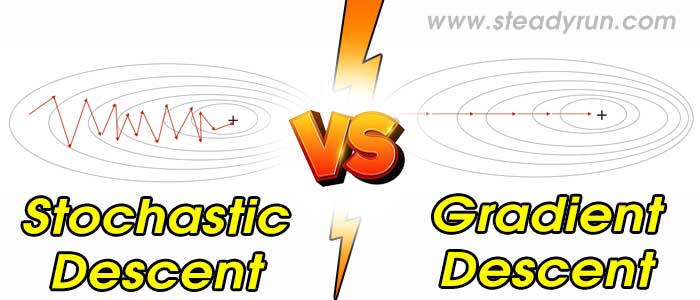


Difference between Stochastic Gradient Descent and Gradient Descent

Both algorithms search for a set of parameters that minimizes a loss function: they evaluate the current parameters against training data, then adjust them in the direction that reduces the loss.
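To make the comparison concrete, the two update rules can be written side by side. This is a sketch in notation that is not from the original: it assumes the loss is the average of per-sample losses $\ell_i$ over $n$ training samples, with parameters $\theta$ and a learning rate $\eta$.

$$
\theta \leftarrow \theta - \eta \, \nabla_\theta \frac{1}{n} \sum_{i=1}^{n} \ell_i(\theta) \qquad \text{(gradient descent)}
$$

$$
\theta \leftarrow \theta - \eta \, \nabla_\theta \, \ell_i(\theta), \quad i \text{ chosen at random} \qquad \text{(stochastic gradient descent)}
$$

The only difference is how much data goes into each gradient: all $n$ samples in the first rule, a single sample in the second.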

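In standard (batch) gradient descent, you'll evaluate all training samples before each parameter update. This is akin to taking big, slow steps toward the solution: each step is expensive to compute, but it follows the exact gradient of the loss over the whole training set.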
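As a minimal sketch of the full-batch behavior, the example below fits a line `y = w*x + b` with mean squared error. The toy data, the function name `batch_gradient_descent`, and the `lr` and `epochs` values are all illustrative assumptions, not part of the original text.

```python
# Hypothetical toy data: points that lie exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def batch_gradient_descent(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w*x + b by minimizing mean squared error with full-batch updates."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        # Accumulate the gradient over every training sample...
        for x, y in zip(xs, ys):
            error = (w * x + b) - y
            grad_w += 2.0 * error * x / n
            grad_b += 2.0 * error / n
        # ...then make exactly one parameter update per pass over the data.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = batch_gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```

Note that the inner loop touches all samples before `w` and `b` change, so the cost of one update grows with the size of the training set.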

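In stochastic gradient descent, you'll evaluate only one training sample before updating the parameters. This is akin to taking small, quick steps toward the solution: each step is cheap, but because it is based on a single sample, it is only a noisy estimate of the full gradient.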
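The single-sample update can be sketched the same way. This example assumes a toy line-fitting problem with squared error. The data, the function name `stochastic_gradient_descent`, the fixed `seed`, and the `lr` and `epochs` values are hypothetical choices for illustration only.

```python
import random

# Hypothetical toy data: points that lie exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def stochastic_gradient_descent(xs, ys, lr=0.01, epochs=2000, seed=0):
    """Fit y = w*x + b, updating the parameters after every single sample."""
    w, b = 0.0, 0.0
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    indices = list(range(len(xs)))
    for _ in range(epochs):
        # Visit the samples in a fresh random order on each pass.
        rng.shuffle(indices)
        for i in indices:
            error = (w * xs[i] + b) - ys[i]
            # Gradient of this one sample's squared error; update immediately.
            w -= lr * 2.0 * error * xs[i]
            b -= lr * 2.0 * error
    return w, b


w, b = stochastic_gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```

Here the parameters change once per sample, so a single pass over five samples produces five updates. On noisy real-world data the path toward the minimum would jitter more than it does on this noiseless toy set.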

Image Credits: Freepik